“Extended reality is turning cancer research into a team sport”

Interview:

Ingeborg Morawetz, MA


From patient communication to the vision of a digital tumour board: the XR Tumour Evolution Project (XRTEP) is a unique, real-world application of design in cancer research. It is enabled by a rare interdisciplinary collaboration between architects, cancer clinicians, scientists, and technologists. How did the project emerge? And what can it teach us about the future of cancer research?

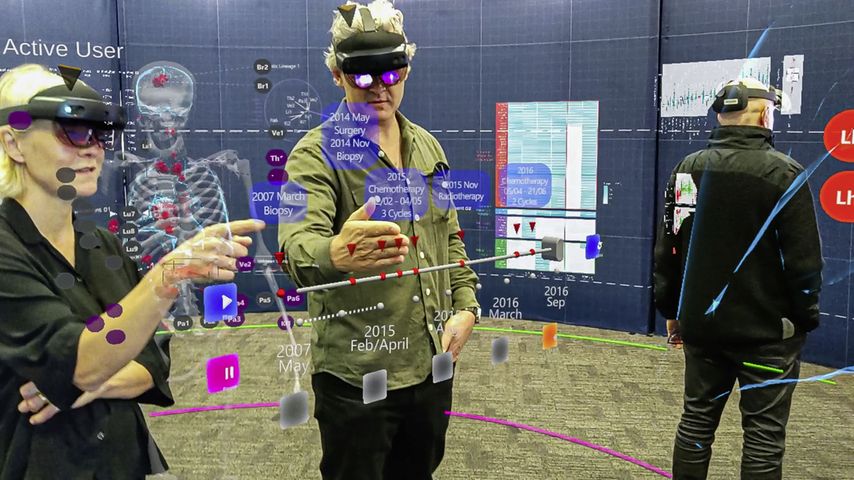

In an arena walled by white panels, people gather. They move around, brush their hands through the air and point at things invisible to those outside. Most of them are wearing extended reality headsets, some are looking at tablets.

All of them took the chance to experience a new dimension of oncology research at this year’s Ars Electronica in Linz: the XR Tumour Evolution Project (XRTEP) from Auckland, New Zealand, was part of the annual exhibition and opened its doors to those interested.

We talked to four researchers involved in the project: Dr. Ben Lawrence, medical oncologist and cancer genomics researcher, Dr. Tamsin Robb, cancer genomics researcher, Uwe Rieger, lead technical team XR design, and Yinan Liu, XR design. Present in spirit was the patient whose donation made the XRTEP possible.

How did the XR Tumour Evolution Project come into existence?

Ben Lawrence: I’ll start with the clinical point of view. As cancer moves around the body, it changes. Those changes affect the way cancer responds to treatment. Some treatments work well in one part of the cancer, but won’t work in another part. We know now that the cause of this is a change in the genomic profile of the cancer in different locations.

In our project, we had an opportunity to sequence multiple different metastases from within a single person with cancer and define that variation to understand how the cancer developed. When we got this initial data, it was lots of gene sequence information that was too complicated for us to understand. We needed a way to visualise this data so that we could get our heads around it.

The XR Tumour Evolution Project (right) next to a depiction of a traditional anatomical theatre at Leiden University, Netherlands, 1594

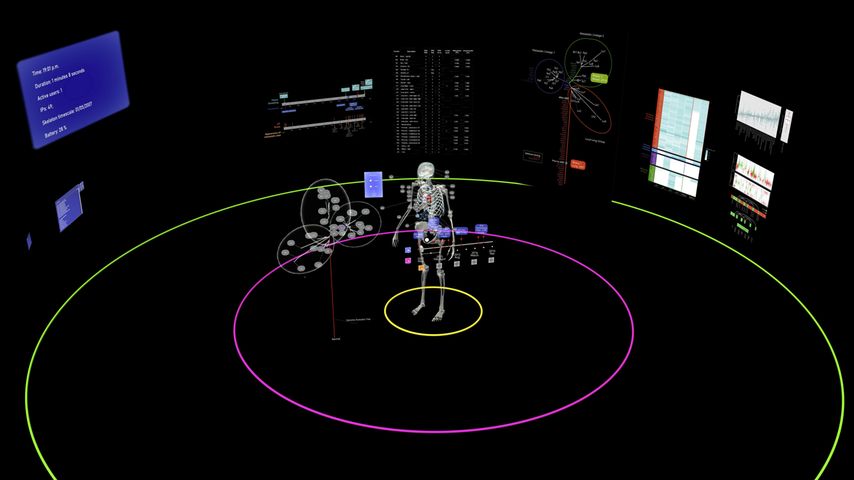

Tamsin Robb: So what we created was a way to bring together this information, including the aspect of time. The model is based on clinical CT scan data from across ten years of a patient’s clinical journey, represented three-dimensionally. Their genomic information is overlaid on their body model at the precise position of the biopsy site that it relates to. In this way, we can interpret the genomic data in light of the clinical information.

We are working at the intersection of various disciplines: mathematics, biology, pathology, radiology, oncology, and more. Extended reality is turning cancer research into a team sport.

Uwe Rieger: Ben Lawrence was the initiator of this project. He contacted the architecture school, where I run a research laboratory called the “arc/sec Lab for Cyber-Physical Design”. Our specialty is to develop new architectural, functional spaces by combining digital worlds with physical, traditional architecture.

What I want to underline is that what we have done in the XRTEP goes beyond what is typically perceived as being augmented or mixed reality. Combining digital and physical tools, we have designed a new form of workspace for up to ten cancer researchers.

The space allows the researchers to operate in a collaborative mode to explore cancer through all types of media. The application can also be linked up to tablets, whiteboards and a large screen. We created a new type of laboratory, an optimised private arena, which interlinks cancer, space and time.

Yinan Liu: I was mainly working on the user interaction and the front end, but also helped to develop a stable back end. Both were needed in order to have a working tool for the researchers and oncologists. Interestingly, we weren’t primarily looking at it as a visualisation. The aspect of collaboration had priority.

What would people need in order to complete their tasks? What do we need to consider in order to build a hybrid workspace for them? We also had to design within the limitations of the HoloLens headset. Our main goal was to enable the scientists to gain a quick understanding of the presented information in the most basic form.

“Collaboration” was one of the main themes?

Ben Lawrence: The knowledge needed to understand the way cancer spreads is not held by one person, one researcher or one doctor. The brains of multiple different people are needed. The architects created a space in which those knowledge holders could come together, look at the same data at the same time and share their ideas.

But this project was not only a collaboration between researchers, it was also a project in close collaboration with the patient and their family. The patient recognised the opportunity while they were alive and encouraged us to set up this project with data from their cancer after they died. Pathologists were able to remove samples of these cancers that could then be sequenced.

We have an ongoing relationship with the patient’s family. We consider them as partners in this research project and we continue to involve them in each step of the project. The project is a patient-led opportunity. This is important for the way we ran the project and for the values that we built into our work.

Uwe Rieger: When I say we are merging digital information with physical architectural construction, I am addressing this from the architectural perspective. The project is not just a pop-up with an extended-reality headset inside. All parts have been designed to go together. It hosts ten researchers, but it can also be an educational space to communicate concepts about cancer and tumour evolution.

How many hours of coding, planning and cooperative work went into the project?

Yinan Liu: Because we are using the platform “Unity”, the back end is not all hard-coded. The building blocks are actually not code-heavy at all. The computer technology is not as intense as one would assume. It has taken us two years to get to this point, but I would say it took this long because we are focusing on the usability and the design of the space.

So a lot of the time we were creating the central skeleton and then bringing everybody in, looking at what Tamsin or Ben might say, or what more they would want out of the information. There was a lot of back and forth on the usability design.

We also had to make sure that ten people would not crowd around any one hologram. That led to the decision to separate the information into layers: the immediate, the regional, and then the more global, two-dimensional information. This approach also included multi-device communication, through new tools as well as the researchers’ usual tools.

Uwe Rieger: As far as I remember, Yinan spent about 1000 hours on the back end, and half of it went into the programming side of things. I believe it was another 800 hours spent by our collaborators at eResearch on programming.

Where do you see the project in five years?

Ben Lawrence: There are a few ways this project could continue on. One of the clinical opportunities lies in patient communication. When we are with patients and we explain to them where their cancer is in their bodies, with extended reality we will have a way to also show them, and to visualise whether and how the cancer has responded to treatment.

We will be able to better explain where their pain comes from or which passages are blocked by tumours, to let them see which parts of their tumours have shrunk and which have grown.

Tamsin Robb: Absolutely. Patients are undergoing such an emotionally demanding journey – if extended reality could help them to understand their medical information, that may be particularly valuable.

What I hope we have also done is create a blueprint for what some clinical decision-making tools could look like in the future. This endeavour would be a substantial second, separate project. The natural clinical scenario where this vision fits is the molecular tumour board, where you would typically have medical experts from a range of disciplines coming together to discuss a patient’s case. These experts try to make clinical decisions with all of the clinical information right in front of them.

With extended reality, they can be guided by visualised molecular information, like DNA sequencing of one or more tumours, on top of all of the more traditional technologies that we have to provide information about a patient.

Uwe Rieger: While XRTEP is a prototype that we have been developing for a specific condition, we are currently looking into how we could expand it to the next logical stage.

On the one hand, we want to expand this tool in a way that multiple people offsite can participate. Ideally, you don’t have to be locally present to take part. Because that is actually another advantage of the Metaverse technology: we are moving towards being able to share and discuss information three-dimensionally from different locations. You won’t need abstract tools to access and share information. It will be direct and tangible.

On the other hand, there are all types of medical scans used in cancer research. They are all three-dimensional; so far, however, they are all represented in two-dimensional form. So we are looking into different pipelines for bringing this information directly into the three-dimensional worlds that we are creating. Some types of medical scans might be rendered in 3D more accurately than others.

Yinan Liu: If you look at the development process, whether it’s HoloLens or Magic Leap, it is all moving towards a new era of spatial computing. And if we don’t just look at this technology as an entertainment headset, and it becomes as popular or as common as mobile phones, which I think it will, that will drive usability forward much further.

And of course, we also know that there have been other medical investigations ever since the HoloLens came out. Since we can visualise things spatially, a lot has happened. I definitely see that progress in medicine over the next five years.

Tamsin Robb: It is an exploding area of activity. There are a lot of projects underway that aren’t really advertised in the public domain. We have learned about projects for guiding surgery remotely to enable surgery to happen in rural regions where there aren’t specialists available. There are also many medical education tools in the works for training the medical workforce of the future.

It’s just a matter of finding the best opportunities to exploit this technology in the medical domain. We started the project thinking that our concept was really unusual, but we have realised that we have a lot of friends.

Factbox

A patient with inoperable cancer donated their tissue for an investigation into the progression of their disease. At the time of their passing, they had 89 separate tumours. Over half of these were genome-sequenced, yielding precise detail about how cancer had moved through their body.

The volume and complexity of the information obtained posed new challenges in representing data. Also at issue was shifting and strengthening relations between the various siloed disciplines working with that data to contribute to cancer research. The problem was visual, spatial, temporal, and social. This was a design problem to be pursued in collaboration with spatial practitioners.

XR Tumour Evolution Project (XRTEP) is an immersive, extended-reality arena, experienced via Microsoft’s HoloLens 2. It draws on front-line architectural research, technology and practice in combining a new mode of human-computer interaction (where physical and digital components are interlinked) with traditional spatial-material practices.

The space is defined by a fabric-skinned, lightweight foldable structure 3 metres high by 8.5 metres in diameter. At its heart stands an interactive holographic model of the patient’s skeleton, organs, and tumours. It is a shared spatial-temporal index. Linked datasets are arranged in three concentric layers around the model. The disciplinary specificity of the data increases with distance from the model, allowing moments of intra- or inter-disciplinary focus at different points in the space.

XRTEP brings together cancer experts and furnishes them with tools and data in space to enable real-time collaboration around how and why cancer spreads in the human body. Researchers watch tumours grow, shrink, and spread over time, discern patterns in the data, and connect them to their spatial origins.1,2

Source:

1 Designers Institute of New Zealand: Best Design Awards. University of Auckland, School of Architecture and Planning; Faculty of Medical and Health Sciences; and Centre for eResearch: XR Tumour Evolution Project. Online at https://bestawards.co.nz/value-of-design-award/university-of-auckland-school-of-architecture-1/xr-tumour-evolution-project/ . Accessed on 22.11.2022

2 Ars Electronica 2022: Tumour Evolution in Extended Reality (XR). A collaborative XR tool to examine tumour evolution. Online at https://www.ars.nz/tumour-evolution-in-extended-reality/ . Accessed on 22.11.2022
